Deep Learning (DL) is used in digital image processing to solve difficult problems (e.g., image colorization, classification, segmentation, and detection). Deep learning methods like Convolutional Neural Networks (CNNs) have pushed the boundaries of what is possible by improving prediction performance using big data and abundant computing resources.

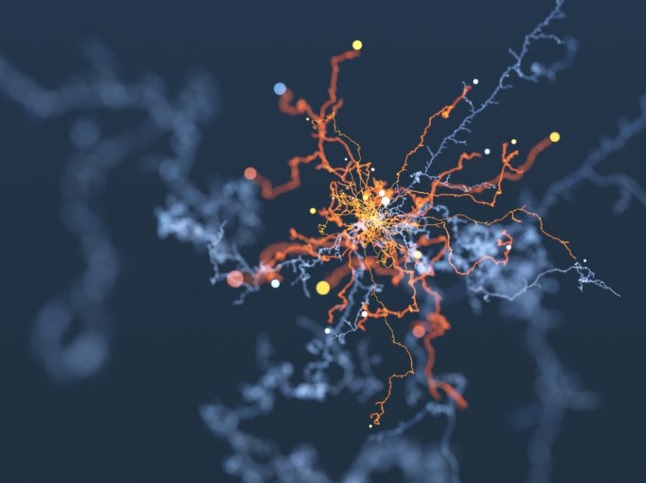

DL is a subset of machine learning. At its heart are Artificial Neural Networks (ANNs), a computing paradigm loosely inspired by the functioning of the human brain. An ANN comprises many computing cells, or ‘neurons,’ each of which performs a simple operation; by interacting with one another, they arrive at a decision, much as neurons in the brain do. Deep Learning is about accurate, efficient, and ideally unsupervised learning, or ‘credit assignment,’ across many neural network layers.

Interest in DL has grown recently because advances in processing hardware have made it practical. Self-organization and the exploitation of interactions between small units have been shown to perform better than central control, particularly for complex non-linear process models. DL also offers better fault tolerance and adaptability to new data.

Is DL causing traditional Computer Vision (CV) techniques to become obsolete? Has deep learning displaced traditional computer vision? Is it still necessary to research traditional CV techniques when DL appears to be so effective? These are all questions brought up in the community in recent years. This post will provide a comparison of deep learning and traditional computer vision.

Advantages of Deep Learning

Rapid advances in deep learning and in device capabilities, such as computing power, memory capacity, image sensor resolution, power consumption, and optics, have improved the performance and cost-effectiveness of vision-based applications and hastened their spread. Compared to traditional CV techniques, DL allows CV engineers to achieve greater accuracy in image classification, semantic segmentation, object detection, and Simultaneous Localization and Mapping (SLAM). Because DL applications use neural networks that are trained rather than programmed, they often require less expert analysis and fine-tuning, and they can take advantage of the massive amounts of video data available in today’s systems. DL algorithms are also more flexible than domain-specific CV algorithms, because CNN models and frameworks can be re-trained on a custom dataset for any use case.

Object detection on a mobile robot is a useful example for comparing the two types of computer vision algorithms. The traditional approach to object detection is to use well-established CV techniques such as feature descriptors (SIFT, SURF, BRIEF, and so on). Before the advent of DL, feature extraction was used for tasks like image classification. Features are small “interesting,” descriptive, or informative patches in images. Extracting them can involve a variety of CV algorithms, such as edge detection, corner detection, or threshold segmentation. As many features as possible are extracted from the training images, and these features are used to build a definition, known as a bag of words, for each object class. During deployment, these definitions are searched for in other images. If many features from one class’s bag of words appear in a new image, the image is classified as containing that object (e.g., a chair or a horse).
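The bag-of-words pipeline described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a production implementation. The local descriptors are assumed to have been extracted already (for example by SIFT). Here they are replaced by synthetic 8-D vectors. The vocabulary is built with plain k-means using a deterministic farthest-point initialization. Images are compared by the L1 distance between word histograms. All function names are hypothetical.

```python
import numpy as np

def build_vocabulary(descriptors, k, iters=20):
    """Cluster local descriptors into k 'visual words' with plain k-means."""
    # Farthest-point initialization: a simple, deterministic stand-in for k-means++.
    centers = [descriptors[0]]
    for _ in range(1, k):
        d2 = ((descriptors[:, None, :] - np.array(centers)[None, :, :]) ** 2).sum(axis=2)
        centers.append(descriptors[d2.min(axis=1).argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        # Assign each descriptor to its nearest center (squared Euclidean distance).
        d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each center to the mean of its members (leave it in place if it has none).
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):
                centers[j] = members.mean(axis=0)
    return centers

def bow_histogram(descriptors, vocabulary):
    """Map each descriptor to its nearest visual word; return normalized word counts."""
    d2 = ((descriptors[:, None, :] - vocabulary[None, :, :]) ** 2).sum(axis=2)
    counts = np.bincount(d2.argmin(axis=1), minlength=len(vocabulary)).astype(float)
    return counts / counts.sum()

def classify(descriptors, vocabulary, class_histograms):
    """Pick the class whose reference histogram is closest (L1 distance) to the image's."""
    h = bow_histogram(descriptors, vocabulary)
    return min(class_histograms, key=lambda c: np.abs(h - class_histograms[c]).sum())

# Synthetic stand-ins for real descriptors (8-D here; SIFT descriptors are 128-D).
rng = np.random.default_rng(1)
chair_desc = rng.normal(0.0, 0.3, size=(50, 8))
horse_desc = rng.normal(3.0, 0.3, size=(50, 8))
vocab = build_vocabulary(np.vstack([chair_desc, horse_desc]), k=4)
references = {
    "chair": bow_histogram(chair_desc, vocab),
    "horse": bow_histogram(horse_desc, vocab),
}
query = rng.normal(3.0, 0.3, size=(20, 8))   # descriptors from a new image
print(classify(query, vocab, references))
```

Real systems typically use many more visual words and a proper classifier on top of the histograms. Every one of those choices is a parameter the CV engineer has to tune by hand.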

The problem with the traditional approach is that it requires deciding which features are important in each image. Feature extraction becomes increasingly difficult as the number of classes to classify grows. To determine which features best describe different classes of objects, the CV engineer must use his or her judgment and go through a lengthy trial-and-error process. Furthermore, each feature definition involves numerous parameters, all of which the CV engineer must fine-tune. DL introduced the concept of end-to-end learning, in which the machine is simply given a dataset of images annotated with the classes of objects present in each image. When a DL model is ‘trained’ on the given data, the neural network discovers the underlying patterns in each class of images and automatically works out the most descriptive and salient features for each object class.
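End-to-end learning can be illustrated at toy scale. In this sketch the model receives only raw pixels and labels, and it learns its own per-pixel weights by gradient descent. No features are designed by hand. To keep the example short, it uses a single-layer logistic-regression model rather than a deep network. The data is a hypothetical set of 5x5 images containing a noisy diagonal stroke in one of two orientations.

```python
import numpy as np

def make_strokes(n, size=5, seed=0):
    """Toy labeled images: label 1 is a main-diagonal stroke, label 0 an anti-diagonal one."""
    rng = np.random.default_rng(seed)
    X = rng.normal(0.0, 0.1, size=(n, size, size))  # noisy background
    y = np.arange(n) % 2                            # alternate labels 0, 1, 0, 1, ...
    idx = np.arange(size)
    for i in range(n):
        if y[i] == 1:
            X[i, idx, idx] += 1.0                   # main diagonal
        else:
            X[i, idx, size - 1 - idx] += 1.0        # anti-diagonal
    return X.reshape(n, -1), y                      # raw pixels, no hand-crafted features

def train_logistic(X, y, lr=0.5, epochs=300):
    """Fit per-pixel weights by gradient descent on the logistic (cross-entropy) loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))   # predicted P(label == 1)
        err = p - y                              # gradient of the loss w.r.t. the logits
        w -= lr * X.T @ err / len(y)
        b -= lr * err.mean()
    return w, b

X_train, y_train = make_strokes(200, seed=0)
X_test, y_test = make_strokes(100, seed=1)
w, b = train_logistic(X_train, y_train)
accuracy = np.mean(((X_test @ w + b) > 0) == y_test)
print(f"test accuracy: {accuracy:.2f}")
print(np.round(w.reshape(5, 5), 1))   # the learned weights act as the "feature detector"
```

After training, the weight map is positive along one diagonal and negative along the other. The model has worked out the discriminative pattern from the labels alone.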

Deep Neural Networks (DNNs) have been shown to outperform traditional algorithms, albeit with trade-offs in computing requirements and training time. Because all state-of-the-art CV approaches use this methodology, the CV engineer’s workflow has changed dramatically. Knowledge and expertise in extracting hand-crafted features have been replaced by knowledge and expertise in iterating through deep learning architectures.

The development of CNNs has had a huge impact on CV in recent years and is responsible for a significant increase in the ability to recognize objects. This surge in progress has been enabled by increases in computing power and in the amount of data available for training neural networks. The seminal paper “ImageNet Classification with Deep Convolutional Neural Networks” had been cited over 3000 times at the time of writing. That figure reflects the recent explosion and widespread adoption of various deep neural network architectures for CV.

CNNs use kernels (also known as filters) to detect features, such as edges, throughout an image. A kernel is simply a matrix of weights that has been trained to detect a specific feature. As the name suggests, the main idea behind CNNs is to spatially convolve the kernel over a given input image to check whether the feature it is meant to detect is present. At each position, the convolution computes the dot product of the kernel and the input region it overlaps. The result is a value representing how confident the network is that the feature is present there. The region of the original image that the kernel is looking at is known as its receptive field. The output of the convolution is summed with a bias term and then passed through a non-linear activation function. This introduces non-linearity into the model, so that the network can learn more than linear mappings. Common activation functions include Sigmoid, TanH, and ReLU (Rectified Linear Unit).
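A single convolutional unit can be sketched in NumPy. The kernel slides over the image and takes a dot product with each receptive field, then a bias is added and the result goes through ReLU. Like most CNN libraries, this computes cross-correlation, meaning the kernel is not flipped. The kernel weights and bias here are hand-set for illustration; in a real CNN they would be learned. The function names are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (stride 1, no padding) and add a bias.

    Like most CNN libraries, this computes cross-correlation: the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            receptive_field = image[r:r + kh, c:c + kw]
            out[r, c] = np.sum(receptive_field * kernel) + bias  # dot product + bias
    return out

def relu(x):
    return np.maximum(x, 0.0)

# A 4x4 image whose left half is dark and right half is bright (a vertical edge).
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)
# Hand-set weights standing in for a kernel a CNN might learn for vertical edges.
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)
feature_map = relu(conv2d_valid(image, kernel, bias=-0.5))
print(feature_map)
```

Only the middle column of the feature map is non-zero, because that is the only place where the receptive field straddles the edge. The negative bias combined with ReLU suppresses the weak responses elsewhere.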

These activation functions are chosen based on the nature of the data and the classification task. ReLUs, for example, are thought to be more biologically plausible (neurons in the brain either fire or they don’t). Because a ReLU outputs zero for every negative input, it produces sparser, more efficient representations. Because its gradient does not shrink for positive inputs, it is also less susceptible to the vanishing gradient problem. Together, these properties tend to give better results for image recognition tasks.
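Both properties of ReLU mentioned above can be checked numerically. This sketch compares the outputs and local gradients of the three activation functions. The ReLU derivative at exactly zero is set to 0, which is a common convention. The 10-layer product is a simplified illustration of how gradients shrink as they are multiplied through the network during backpropagation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)                 # peaks at 0.25 (x = 0), vanishes for large |x|

def relu_grad(x):
    return (x > 0).astype(float)         # 1 for positive inputs, 0 otherwise

x = np.array([-3.0, -1.0, 0.5, 4.0])
print("tanh:", np.tanh(x))
print("relu:", relu(x))                  # negative inputs become exactly 0 -> sparse output
print("sigmoid grad:", sigmoid_grad(x))
print("relu grad:", relu_grad(x))
# Backpropagating through 10 layers multiplies 10 such local gradients:
print("sigmoid, best case:", 0.25 ** 10, "| relu, active units:", 1.0 ** 10)
```

Even in the best case, the sigmoid factor of 0.25 per layer shrinks the gradient by about six orders of magnitude over ten layers. An active ReLU passes it through unchanged.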

A pooling layer frequently follows the convolutional layer to remove redundancy in the input features, which speeds up training and reduces the amount of memory consumed by the network. Max-pooling, for example, slides a window over the input and outputs only the maximum value in each window. This shrinks the feature map while keeping its strongest responses. Deep CNNs typically contain multiple pairs of convolutional and pooling layers. Finally, the output volume of the last of these layers is flattened into a feature vector. That vector is passed to one or more fully connected (dense) layers, which compute scores for the output classes/features. These scores are then fed into a function such as Softmax, which maps them to a vector of probabilities whose elements sum to one.
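The end of the pipeline described above can be sketched as follows: max pooling, then flattening, then a fully connected layer, then Softmax. This is a minimal NumPy illustration. The 4x4 feature map and the dense-layer weights are hypothetical placeholders, not trained values.

```python
import numpy as np

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/cols that don't fill a whole window are dropped."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    windows = fmap[:h * size, :w * size].reshape(h, size, w, size)
    return windows.max(axis=(1, 3))

def softmax(scores):
    e = np.exp(scores - scores.max())    # subtracting the max avoids overflow
    return e / e.sum()

def classifier_head(fmap, weights, bias):
    """Pool -> flatten -> fully connected layer -> softmax probabilities."""
    features = max_pool(fmap).ravel()    # flatten into a feature vector
    scores = weights @ features + bias   # one score per output class
    return softmax(scores)

fmap = np.arange(16, dtype=float).reshape(4, 4)   # stand-in for a conv feature map
print(max_pool(fmap))                              # max of each 2x2 window: 5, 7, 13, 15
rng = np.random.default_rng(0)
weights = rng.normal(size=(3, 4))   # hypothetical untrained weights: 3 classes x 4 features
probs = classifier_head(fmap, weights, bias=np.zeros(3))
print(probs, probs.sum())
```

Pooling reduces the 16 values to 4, so the dense layer needs only 3 x 4 weights instead of 3 x 16. This is the memory saving mentioned above.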

Nevertheless, DL is still just one tool in the CV toolbox. The most common neural network in CV, for example, is the CNN. And what exactly is a convolution? It is a widely used traditional image processing technique; Sobel edge detection, for example, is a convolution. The benefits of DL are obvious, and a review of the state of the art is beyond the scope of this article. But DL is not a panacea, and for some problems and applications more traditional CV algorithms are more appropriate.
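To illustrate that point, here is classic Sobel edge detection written as the same sliding-window dot product a CNN layer computes. The only difference is that the kernel weights are fixed and hand-designed rather than learned. Strictly speaking, a convolution flips the kernel. For Sobel kernels, that flip only changes the sign of each gradient, so the gradient magnitude computed here is unaffected. This is a NumPy sketch; the helper names are hypothetical.

```python
import numpy as np

# Classic hand-designed Sobel kernels: horizontal and vertical intensity gradients.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T

def filter2d(image, kernel):
    """Sliding-window dot product (stride 1, no padding), as in a CNN layer."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def sobel_magnitude(image):
    gx = filter2d(image, SOBEL_X)   # responds to vertical edges
    gy = filter2d(image, SOBEL_Y)   # responds to horizontal edges
    return np.hypot(gx, gy)         # gradient magnitude sqrt(gx^2 + gy^2)

image = np.zeros((5, 5))
image[:, 3:] = 1.0                  # bright region on the right: a vertical edge
print(sobel_magnitude(image))
```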

Advantages of Traditional Computer Vision

The traditional feature-based approaches such as those listed below are useful in improving performance in CV tasks:

- Scale-Invariant Feature Transform (SIFT)

- Speeded Up Robust Features (SURF)

- Features from Accelerated Segment Test (FAST)

- Hough transforms

- Geometric hashing
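Of the techniques listed above, the Hough transform is short enough to sketch in full. Every edge pixel votes for all the lines that could pass through it. Lines are parameterized as x·cos(θ) + y·sin(θ) = ρ, and collinear pixels pile up votes in the same (ρ, θ) cell. The sketch below is a minimal NumPy version with 1-degree θ bins. The edge points are a hypothetical vertical line that would, in practice, come from an edge detector.

```python
import numpy as np

def hough_lines(edge_points, shape, n_theta=180):
    """Accumulate votes for lines x*cos(theta) + y*sin(theta) = rho.

    Each edge pixel (row y, col x) votes once for every quantized theta.
    """
    h, w = shape
    diag = int(np.ceil(np.hypot(h, w)))                  # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)
    acc = np.zeros((2 * diag + 1, n_theta), dtype=int)   # rows: rho + diag, cols: theta
    cols = np.arange(n_theta)
    for y, x in edge_points:
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rhos + diag, cols] += 1
    return acc, thetas, diag

# Twenty edge pixels on the vertical line x = 7 in a 20 x 20 image.
points = [(y, 7) for y in range(20)]
acc, thetas, diag = hough_lines(points, shape=(20, 20))
print("votes for (rho=7, theta=0 deg):", acc[7 + diag, 0])
print("highest vote count:", acc.max())
```

With such coarse bins, neighbouring cells (for example θ = 1°) can collect the same number of votes. Practical implementations therefore add non-maximum suppression when picking peaks. The method needs no training data and no class knowledge.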

Feature descriptors such as SIFT and SURF are generally combined with traditional machine learning classification algorithms, such as Support Vector Machines and K-Nearest Neighbours, to solve CV problems.
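The classification step of such a pipeline can be sketched with K-Nearest Neighbours. This assumes each image has already been reduced to a fixed-length descriptor vector, for example a bag-of-words histogram built from SIFT or SURF features. The 4-D vectors and the "bolt"/"nut" labels below are hypothetical. An SVM (for example scikit-learn's `SVC`) would slot into the same place.

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Majority vote among the k training vectors closest to `query` (Euclidean)."""
    dists = np.linalg.norm(train_X - query, axis=1)
    nearest = np.argsort(dists)[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical fixed-length image descriptors (e.g. bag-of-words histograms) and labels.
train_X = np.array([
    [0.9, 0.1, 0.0, 0.0],
    [0.8, 0.2, 0.0, 0.0],
    [0.7, 0.2, 0.1, 0.0],
    [0.0, 0.1, 0.9, 0.0],
    [0.0, 0.0, 0.8, 0.2],
    [0.1, 0.0, 0.7, 0.2],
])
train_y = np.array(["bolt", "bolt", "bolt", "nut", "nut", "nut"])
print(knn_predict(train_X, train_y, np.array([0.85, 0.15, 0.0, 0.0])))
print(knn_predict(train_X, train_y, np.array([0.0, 0.05, 0.85, 0.1])))
```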

Traditional CV techniques can often solve a problem much more efficiently and with fewer lines of code than DL, so DL is sometimes overkill. SIFT, and even simple color thresholding and pixel counting algorithms, are not class-specific; they work on any image. Features learned by a deep neural net, on the other hand, are specific to its training dataset. If that dataset is poorly constructed, the network is unlikely to perform well on images outside it. As a result, tasks that do not require class-specific knowledge, such as image stitching and 3D mesh reconstruction, are frequently performed with SIFT and similar algorithms. These tasks could in principle be learned from a very large dataset. However, that would require a significant research effort, which is not feasible for a closed application. Use common sense when deciding which path to take for a particular CV application.

For example, consider sorting products on an assembly line conveyor belt into two classes, one painted red and the other painted blue. A deep neural network will work if enough training data can be collected. However, simple color thresholding can achieve the same result. Some problems can be solved with simpler and faster methods.
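The conveyor-belt example above needs only a few lines of color thresholding and pixel counting, with no training data at all. This NumPy sketch assumes frames arrive as H x W x 3 RGB `uint8` arrays. The `margin` and `min_fraction` thresholds are hypothetical values that would need tuning for real lighting conditions.

```python
import numpy as np

def classify_by_color(rgb, margin=50, min_fraction=0.2):
    """Label an RGB image 'red', 'blue', or 'unknown' by counting dominant-color pixels.

    `margin` and `min_fraction` are hypothetical thresholds to tune for real lighting.
    """
    img = rgb.astype(int)                  # avoid uint8 wrap-around when subtracting
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    red_pixels = np.count_nonzero(r - np.maximum(g, b) > margin)
    blue_pixels = np.count_nonzero(b - np.maximum(r, g) > margin)
    if max(red_pixels, blue_pixels) < min_fraction * r.size:
        return "unknown"                   # not enough colored pixels, e.g. empty belt
    return "red" if red_pixels > blue_pixels else "blue"

frame = np.full((10, 10, 3), 128, dtype=np.uint8)   # grey conveyor belt
frame[2:8, 2:8] = (200, 30, 30)                     # a red-painted product
print(classify_by_color(frame))
```

Every decision the function makes can be traced back to two readable thresholds. This is the transparency that the next paragraph contrasts with DNNs.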

What happens if a DNN performs poorly outside its training data? If the training dataset is too small, the model may overfit it and be unable to generalize to the task at hand. Because a DNN has millions of parameters with complex interrelationships, manually tweaking them would be too difficult. In this sense, DL models have been criticized as black boxes. Traditional CV algorithms, by contrast, are fully transparent, allowing you to judge whether your solution will work outside the training set. If anything goes wrong, their parameters can be tweaked to perform well on a wider range of images.

Today, traditional techniques are used when a problem can be simplified enough to be deployed on low-cost microcontrollers. They are also used to support deep learning techniques by constraining the problem, for example by highlighting certain features in the data, augmenting data, or assisting with dataset annotation. We’ll go over various image transformation techniques later in this article to help you improve your neural net training. Finally, many more difficult problems in CV cannot be easily solved with deep learning but benefit from solutions using “traditional” techniques. These include robotics, augmented reality, automatic panorama stitching, virtual reality, 3D modeling, motion estimation, video stabilization, motion capture, video processing, and scene understanding.
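As an illustration of traditional techniques supporting deep learning, this is a minimal data-augmentation sketch. Classic image transforms (a mirror flip, a 90-degree rotation, and a random brightness change) generate extra training variants from each labeled image. It assumes these transforms preserve the label. That holds for many objects, but not for cases like the digits 6 and 9. The brightness range is a hypothetical choice.

```python
import numpy as np

def augment(image, rng):
    """Return label-preserving variants of a uint8 image (H x W or H x W x C)."""
    flipped = np.fliplr(image)                       # mirror left-right
    rotated = np.rot90(image)                        # rotate 90 degrees counter-clockwise
    gain = rng.uniform(0.7, 1.3)                     # hypothetical brightness range
    brightened = np.clip(image.astype(float) * gain, 0, 255).astype(np.uint8)
    return [flipped, rotated, brightened]

rng = np.random.default_rng(0)
image = np.arange(12, dtype=np.uint8).reshape(3, 4)
for variant in augment(image, rng):
    print(variant.shape, variant.dtype)
```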
